AI needs lifelong learning, says senior expert

Xinhua

28 Jan 2026, 15:15 GMT+10

SINGAPORE, Jan. 28 (Xinhua) -- What's next for artificial intelligence (AI)? For Stephen Smith, a senior U.S. robotics researcher, the answer may lie in the way humans learn: lifelong learning.

"What if you think about true intelligence and how humans learn? They learn very differently. Humans learn their whole lives," Smith, president of the Association for the Advancement of Artificial Intelligence (AAAI), told Xinhua. He added that most AI today, built on large language models (LLMs), cannot truly learn over time, a gap that frames the questions likely to drive future research.

Speaking during the 40th Annual AAAI Conference on Artificial Intelligence in Singapore, Smith said the most visible breakthrough in recent years is the rise of LLMs. These deep-learning systems, trained on massive datasets, can understand and generate text -- and increasingly, other types of content. "A lot of research is riding that wave or building on it," he said.

Smith traced the rise of LLMs to longer-term shifts in computing and AI research. Over decades, growing computing power and changes in AI reasoning methods gradually pushed the field toward deep learning. That shift set the stage for the rapid evolution of LLMs.

"The innovations behind LLMs -- and deep learning more generally -- have really energized the field," he said. Computer vision has advanced dramatically, and robotics has benefited as well.

A new frontier is agentic AI. Unlike chatbots, which mainly respond to prompts, agentic AI focuses on autonomous decision-making. Such systems are built around agents: software entities that act independently.

Smith sees cooperative systems, where multiple agents work together, as likely to make significant gains in the next few years.

Many of these systems currently use LLMs for high-level decision-making, but "today's LLM technology still relies mainly on pre-programmed coordination plans," he said. "The challenge is to create teams of agents that can form, adapt, and plan together to solve larger, more complex problems on their own."

At Carnegie Mellon University, where Smith is a research professor, his lab is exploring this challenge. One student is studying cooperative autonomous driving in urban environments, where each vehicle carries an LLM and shares sensor data with nearby cars.

"They collaborate to figure out: Is there another vehicle behind you? Or a pedestrian sneaking out that I can't see?" Smith said. Vehicles perceive their surroundings, predict movements, and plan their own paths. Early results suggest the approach can enhance safety and showcase what cooperative, agent-based AI might do.

Still, Smith said, LLMs have limits. "There are a lot of things they don't do well. Some big tech companies would say it's just a matter of tweaking, but this is not likely," he said.

To understand why, Smith explained how most LLMs are built. Models are trained on massive datasets over long periods, producing a base model that is then "frozen." Fine-tuning or reinforcement learning can adjust behavior, but it does not expand the model's knowledge in new directions. "At the same time, fine-tuning the LLMs can disrupt them. It's very much an art," he noted.

Another key limitation is causal reasoning. LLMs model correlations, not cause and effect, and planning is also difficult for them. "It's a capability of human reasoning that they don't really have," he said. Some systems appear to plan, but only because the steps to accomplish a task are pre-programmed. They don't ask, "If I do this, what happens next?"

This gap, Smith added, helps explain why LLMs sometimes produce ridiculous answers.

Embodied AI, including robotics, offers a partial solution. Machines that interact with the world can experiment and learn. "You can drop things and see what happens, or take actions and observe the results," Smith said. This hands-on experience can help machines fill knowledge gaps and understand causality.

Think of a child playing with building blocks. With a few pieces, the child stacks, knocks down, and rearranges structures, gradually discovering what works. Similarly, lifelong learning in AI may rely on small amounts of well-chosen data and on exploration, rather than on massive datasets. "That's essential to the concept of lifelong learning, a feature of human intelligence," Smith said.

"Humans don't read an encyclopedia to acquire common-sense knowledge about how the world works," he added.

These gaps make Smith cautious about "claims of artificial general intelligence (AGI)" -- AI with human-level cognitive abilities. He acknowledged that early chatbots showed promise. "There are problems LLMs have solved that you would imagine would require the intelligence of a graduate student working in that area," he said.

But core challenges remain: LLMs don't continually learn, and they cannot yet revise their understanding as humans do.

He illustrated human flexibility with an example: a phone is normally used for calling, but for someone trapped in a burning building, it could become a tool to break a window. Humans can repurpose objects on the fly, but can AI?

"I just think AGI is not around the corner. From a research perspective, there are plenty of challenges left," he said. Progress will come, but not quickly. "The capabilities or the types of things that machines can do, that humans can now do -- they will increase and increase. I think we've scratched the surface of the level of intelligence and autonomy that machines can potentially have."

Smith also raised more immediate concerns: AI-generated misinformation and altered reality. "We need appropriate guardrails," he said.

More InformationInternational

SectionUS judge stops bid to end legal status of 8,400 kin of US citizens

BOSTON, Massachusetts: A federal judge has rejected attempts by the Trump administration to terminate the legal status of more than...

Jail for Pakistani human rights lawyers over social media posts

ISLAMABAD, Pakistan: Two human rights lawyers were over the weekend sentenced to 17 years each in prison by a Pakistani court over...

Spanish lawyers drop sexual assault complaint against Julio Iglesias

MADRID, Spain: Investigations into accusations of sexual assault by Julio Iglesias in the Bahamas and the Dominican Republic have been...

Canadian ex-Olympic snowboarder arrested for drug smuggling, killings

ONTARIO, California: Former Canadian Olympic snowboarder Ryan Wedding, who was accused of moving 60 tons of cocaine and was responsible...

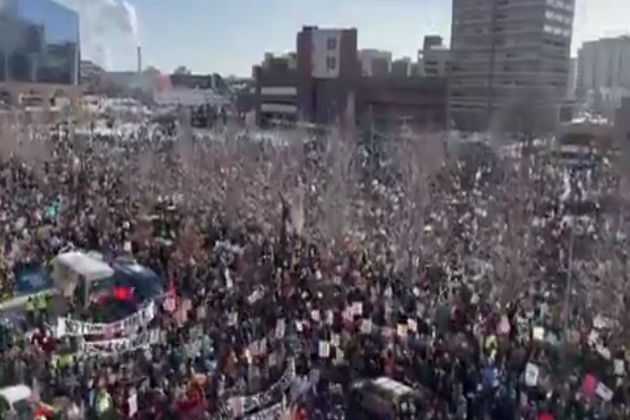

Minneapolis residents protest in the bitter cold against ICE

MINNEAPOLIS, Minnesota: Undeterred by the sub-zero conditions in Minneapolis, thousands of people marched through the streets on January...

Meta halts teens’ AI characters use as scrutiny over kids grows

MENLO PARK, California: With scrutiny of tech platforms' impact on children intensifying, Meta Platforms is temporarily shutting down...

Wisconsin

SectionFive-year-old among four children detained by US immigration officials

COLUMBIA HEIGHTS, Minnesota: A five-year-old boy was among four children detained by U.S. immigration officials from the Minneapolis...

Court allows arrests, tear gas at Minneapolis demonstrations

ST. LOUIS, Minnesota: A U.S. appeals court this week overturned a lower court's order restraining federal officers from arresting or...

U.S. stocks end week mixed, dollarv takes another dive

NEW YORK, New York - U.S. stock indexes delivered a mixed performance Friday to close out the trading week, with the Dow Jones Industrial...

Winning Pistons make free throws, Nuggets don't at dramatic finish

(Photo credit: Ron Chenoy-Imagn Images) Cade Cunningham had 22 points and 11 assists, Tobias Harris also scored 22 points, including...

Sixers show shooting touch as Bucks continue slide

(Photo credit: Bill Streicher-Imagn Images) Paul George scored 32 points and Joel Embiid added 29 to highlight the Philadelphia 76ers'...

Wisconsin, Minnesota try to shrug off painful home losses

(Photo credit: Matt Krohn-Imagn Images) Wisconsin has not lost back-to-back games at home in nearly three full seasons. Yet those...